Note: To follow this tutorial, you need the pr2_interactive_segmentation package installed and a Kinect device with the camera_openni driver. This tutorial assumes that you have completed the previous tutorials: ROS tutorials.

Interactive segmentation with the Kinect

Description: This tutorial explains how to segment textured objects in clutter using a novel trajectory clustering algorithm and a Kinect sensor.

Tutorial Level: INTERMEDIATE

Preparing the scene

Prepare a scene like the one pictured below. You can of course prepare a different scene; however, to see the full strength of the algorithm, the scene should be cluttered.

Preparing the Kinect

Place the Kinect in a position from which all of the objects can be observed. An example is given below. The background does not matter here, because features are extracted only from the objects that moved.
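The reason the background can be ignored is easy to illustrate with a short C++ sketch. It assumes that each feature has already been tracked from its position before the push to its position after it. The `TrackedFeature` struct, the `keepMovedFeatures` function, and the pixel threshold are hypothetical and are not taken from interactive_segmentation_textured.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Hypothetical record of one tracked feature (pixel coordinates).
struct TrackedFeature {
  double x0, y0;  // position before the interaction
  double x1, y1;  // position after the interaction
};

// Keep only features whose displacement exceeds min_shift pixels.
// Features on the static background barely move, so they are discarded.
std::vector<TrackedFeature> keepMovedFeatures(const std::vector<TrackedFeature>& tracks,
                                              double min_shift) {
  assert(min_shift >= 0.0);
  std::vector<TrackedFeature> moved;
  for (const auto& t : tracks) {
    const double dx = t.x1 - t.x0;
    const double dy = t.y1 - t.y0;
    if (std::hypot(dx, dy) > min_shift) moved.push_back(t);
  }
  return moved;
}
```

For example, with a 2-pixel threshold, a feature that drifted by a fraction of a pixel because of sensor noise is dropped, while a feature on a pushed object that moved 8 pixels is kept.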

Preparing the software

First, start the Kinect driver:

roslaunch openni_camera openni_node.launch

Make sure that the Kinect sensor can see the whole scene. To check this, view the RGB image stream:

rosrun image_view image_view image:=/camera/rgb/image_color

Next, make sure that the code is configured correctly. Go to the package and open the main .cpp file in a text editor:

roscd interactive_segmentation_textured/src; gedit c_track_features_with_grasping.cpp

If it does not already look like this, change the beginning of the file as follows:

Build the package with:

rosmake interactive_segmentation_textured

Running the code

To run the code, type:

rosrun interactive_segmentation_textured c_track_features_with_grasping

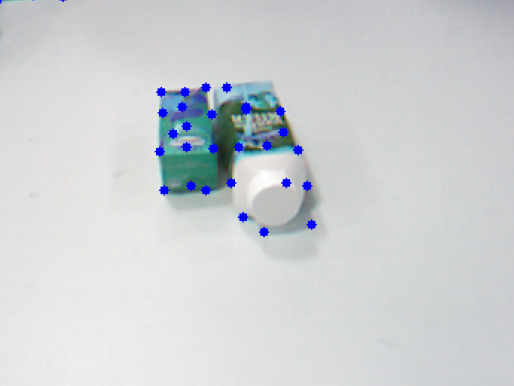

After that an OpenCv window will pop up and you should see the image with extracted Shi-Tomasi features, similar to the one below:

Next, press the spacebar so that the dots become bigger.

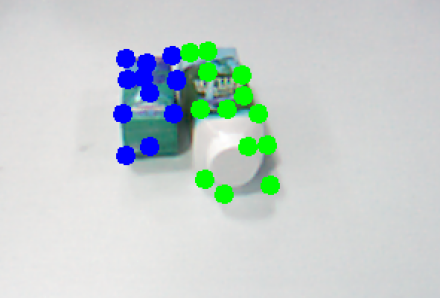
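The dots in the window mark Shi-Tomasi features: pixels where the smaller eigenvalue of the local gradient structure tensor is large. The C++ sketch below computes that score for a single pixel of a plain row-major grayscale buffer. The `Gray` struct, the `shiTomasiScore` function, the window radius, and the central-difference gradients are illustrative assumptions, not the package's implementation.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Hypothetical grayscale image stored row-major.
struct Gray {
  int width, height;
  std::vector<double> px;
  double at(int x, int y) const { return px[y * width + x]; }
};

// Shi-Tomasi score at (x, y): the smaller eigenvalue of the 2x2 structure
// tensor [[sxx, sxy], [sxy, syy]] summed over a (2r+1)x(2r+1) window.
double shiTomasiScore(const Gray& img, int x, int y, int r) {
  // Central differences need one extra pixel of border around the window.
  assert(x - r >= 1 && y - r >= 1 && x + r <= img.width - 2 && y + r <= img.height - 2);
  double sxx = 0.0, syy = 0.0, sxy = 0.0;
  for (int v = y - r; v <= y + r; ++v) {
    for (int u = x - r; u <= x + r; ++u) {
      const double gx = 0.5 * (img.at(u + 1, v) - img.at(u - 1, v));
      const double gy = 0.5 * (img.at(u, v + 1) - img.at(u, v - 1));
      sxx += gx * gx;
      syy += gy * gy;
      sxy += gx * gy;
    }
  }
  // Closed-form smaller eigenvalue of a symmetric 2x2 matrix.
  const double half_trace = 0.5 * (sxx + syy);
  const double half_diff = 0.5 * (sxx - syy);
  return half_trace - std::sqrt(half_diff * half_diff + sxy * sxy);
}
```

A flat patch and a straight edge both score zero, because at least one gradient direction carries no energy, while a corner scores high. That is why corners are preferred as features that can be tracked reliably from frame to frame.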

Now interact with the objects: push one or both of them by about 1 cm, then press the spacebar again. The algorithm tracks the features, randomly samples them, and estimates which of them move rigidly with respect to each other. You can repeat the push-and-cluster cycle a few times and watch how the clustering evolves. This is the result after two pushes for the scene presented above:

You can also try a much more complicated scene with many objects and a textured background, and push all of the objects at the same time; the algorithm should be able to handle this situation as well.
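The idea of randomly sampling features and grouping those that move rigidly together can be approximated by a sequential RANSAC-style procedure. The C++ sketch below is a simplification under stated assumptions. It uses only each feature's position before and after a push rather than full trajectories, and it models each object's motion as a 2D rotation plus translation in the image. All names (`Track`, `clusterRigidMotions`) and parameters (iteration count, pixel tolerance, minimum cluster size, seed) are hypothetical. This is not the trajectory clustering algorithm implemented in interactive_segmentation_textured.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <random>
#include <vector>

// Hypothetical feature track: pixel position before and after a push.
struct Track {
  double x0, y0;
  double x1, y1;
};

// Distance between the predicted and observed position of t under the rigid
// motion p -> R(theta) p + (tx, ty), with c = cos(theta), s = sin(theta).
static double residual(const Track& t, double c, double s, double tx, double ty) {
  const double px = c * t.x0 - s * t.y0 + tx;
  const double py = s * t.x0 + c * t.y0 + ty;
  return std::hypot(px - t.x1, py - t.y1);
}

// Sequential RANSAC: returns one cluster label per track (-1 = unassigned).
std::vector<int> clusterRigidMotions(const std::vector<Track>& tracks, int iterations,
                                     double tol, std::size_t min_cluster, unsigned seed) {
  assert(iterations > 0 && tol > 0.0 && min_cluster >= 2);
  std::vector<int> label(tracks.size(), -1);
  std::mt19937 rng(seed);
  int next_label = 0;
  while (true) {
    std::vector<std::size_t> free_idx;  // tracks not yet assigned to a cluster
    for (std::size_t k = 0; k < tracks.size(); ++k)
      if (label[k] < 0) free_idx.push_back(k);
    if (free_idx.size() < min_cluster) break;

    std::uniform_int_distribution<std::size_t> pick(0, free_idx.size() - 1);
    std::vector<std::size_t> best;
    for (int it = 0; it < iterations; ++it) {
      const std::size_t i = free_idx[pick(rng)];
      const std::size_t j = free_idx[pick(rng)];
      if (i == j) continue;
      const Track& a = tracks[i];
      const Track& b = tracks[j];
      const double ax = b.x0 - a.x0, ay = b.y0 - a.y0;  // pair segment before
      const double bx = b.x1 - a.x1, by = b.y1 - a.y1;  // pair segment after
      if (std::hypot(ax, ay) < 1e-9) continue;          // degenerate sample
      // Rotation from the change in segment direction, translation from track i.
      const double theta = std::atan2(by, bx) - std::atan2(ay, ax);
      const double c = std::cos(theta), s = std::sin(theta);
      const double tx = a.x1 - (c * a.x0 - s * a.y0);
      const double ty = a.y1 - (s * a.x0 + c * a.y0);
      std::vector<std::size_t> inliers;
      for (std::size_t k : free_idx)
        if (residual(tracks[k], c, s, tx, ty) < tol) inliers.push_back(k);
      if (inliers.size() > best.size()) best = inliers;
    }
    if (best.size() < min_cluster) break;  // no well-supported motion left
    for (std::size_t k : best) label[k] = next_label;
    ++next_label;
  }
  return label;
}
```

Each round fits rigid-motion hypotheses to random pairs of still-unlabeled features, keeps the hypothesis with the most inliers as a new cluster, and repeats. Several objects pushed at the same time therefore end up with different labels. The fixed seed makes a run reproducible.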